Sea Change Coming in Asset Management Computing

It’s been a wild ride since June 1977, when the Apple II was released, and 1979, when VisiCalc launched the spreadsheet revolution. Business information, from accounting to job costing and estimating, is no longer produced by hand. Maintenance work orders are automatically generated right on schedule, and databases have replaced the old drawer files we used for machine histories. It is a different world: Complex and efficient systems are implemented using competent software, and, for better or for worse, we no longer have the flexibility to do it “my way” using pencil and paper.

The change to computerized systems has gone well in areas where generally accepted principles are established and where the former pencil-and-paper processes were well understood and well structured. It has not gone well in areas where there are no generally accepted principles and where we struggle to define the information we need to improve decision making. It is relatively easy to computerize an accounting system because everyone knows how a balance sheet is structured and how a profit-and-loss statement works. It is extraordinarily difficult to implement a fleet-management system because there are no generally accepted definitions for basic metrics such as reliability and availability, and because we are still learning how to collect, integrate, and use all the information we need to make good repair, rebuild, and replace decisions.

There has, undoubtedly, been progress. Change and innovation are constants, and every day produces a new software release somewhere. Every day brings improvement to the way we do our computing. Beyond these incremental gains, however, we are currently experiencing a sea change in the way data is collected, integrated, and used across the organization. We have learned that it is no longer good enough to review and analyze the past: We need the ability to look forward and learn about the future.

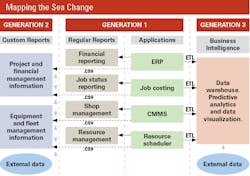

The diagram above attempts to show where we have been and illustrate the change I believe is underway. Let’s look first at the two central columns under Generation 1.

I define Generation 1 computing as the implementation and use of discipline-specific applications that focus on a given discipline or silo in the company. The enterprise resource planning (ERP) application can integrate purchasing, inventory, finance, and human resources, but it is essentially an “office” or accounting application that produces financial reports together with the audit trails and other requirements needed to meet accounting standards. The job costing application focuses on job status reporting; the computerized maintenance management system (CMMS) application computerizes machine histories, work orders, and other shop management tasks. The resource scheduling application helps to plan and schedule the movement of resources as they go from job to job. Although the diagram shows four of these applications, there are many other silo applications used by different parts of the organization on a daily basis.


Generation 1 computing, or, if you wish, the automation of silo-specific tasks, has been our focus in the past. Competent software applications exist, and we seldom work with pencil and paper. The software produces the regular reports required within the business silo, and the standardized formal processes brought about by implementing competent software certainly improve performance.

Most companies are solidly into Generation 1, but there is a lot still to do—especially when it comes to field data collection and mobile access to required information.

Generation 2 computing is shown on the left as a natural outgrowth of Generation 1. Companies do not function in silos, so much is achieved when information is shared and common knowledge is built across them. This is done by producing custom reports that share data found in two or more of the silo-specific applications. The reports are customized, in most cases, because they do not reside in a given silo-specific application and the required data frequently need to be exported to a reporting system through an application programming interface (API) or flat CSV files. It certainly is possible to build a customized utilization report by exporting hours worked from the job-costing application, hour meter readings from the CMMS application, and location data from the telematics system, but it is complex, labor intensive, and error prone. It also takes us into the world of dueling data and spreadsheet wars.
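To make the utilization-report example concrete, here is a minimal Python sketch of a Generation 2 style report built from three flat-file exports. Everything specific in it is an assumption for illustration: the column names, the sample rows, and the ratio itself (hours charged to jobs divided by hour-meter hours), which is only one of several possible definitions of utilization.

```python
import csv
import io

# Hypothetical exports. Real column names and layouts will differ by vendor.
JOB_COST_CSV = """machine_id,hours_worked
EX-101,32.0
EX-101,6.5
DZ-204,18.0
"""

CMMS_CSV = """machine_id,meter_start,meter_end
EX-101,4200.0,4245.0
DZ-204,9100.0,9140.0
"""

TELEMATICS_CSV = """machine_id,location
EX-101,Job 17
DZ-204,Yard
"""


def read_rows(text):
    """Parse CSV text into a list of dictionaries keyed by column name."""
    return list(csv.DictReader(io.StringIO(text)))


def build_utilization_report(job_cost_csv, cmms_csv, telematics_csv):
    """Combine the three exports into one row per machine."""
    # Total the hours charged to jobs for each machine.
    charged = {}
    for row in read_rows(job_cost_csv):
        machine = row["machine_id"]
        charged[machine] = charged.get(machine, 0.0) + float(row["hours_worked"])

    # Last reported location for each machine.
    locations = {r["machine_id"]: r["location"] for r in read_rows(telematics_csv)}

    report = []
    for row in read_rows(cmms_csv):
        machine = row["machine_id"]
        meter_hours = float(row["meter_end"]) - float(row["meter_start"])
        hours = charged.get(machine, 0.0)
        report.append({
            "machine_id": machine,
            "location": locations.get(machine, "unknown"),
            "hours_charged": hours,
            "meter_hours": meter_hours,
            # Assumed metric: share of meter hours that were charged to jobs.
            "charged_vs_meter": hours / meter_hours if meter_hours > 0 else None,
        })
    return report


report = build_utilization_report(JOB_COST_CSV, CMMS_CSV, TELEMATICS_CSV)
for line in report:
    print(line)
```

Even in this toy form, the fragility is visible: the report works only as long as all three systems spell machine IDs the same way and cover the same period.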

As with Generation 1, Generation 2 computing has made great progress. Leading software developers have built ecosystems of matched and, in some cases, integrated applications, and many data transfer problems have been solved. Many companies are well into Generation 2 computing and are certainly seeing the benefits of exporting and sharing data between applications such as accounting and job costing as well as between the CMMS and the resource scheduling system.

The constant call for more data integration and more business intelligence based on a single source of truth probably means that customized reports based on data exports and imports are unlikely to be a long-term solution. Many believe that Generation 2 computing has a limited future.

This brings us to Generation 3 and the sea change. The right-hand side of the diagram shows this as a process whereby data is transferred on a regular basis from the various business applications to a companywide data warehouse using specialized data extraction, transformation, and loading (ETL) software. The silo-specific applications continue to function without change, and the regular reports of specific value to each discipline continue to be produced. The ERP software continues to do everything needed to satisfy accounting standards, the job-costing application continues with regular job status reports, and the CMMS software continues to manage work orders and machine histories. The ETL software runs behind the scenes to maintain a data warehouse that provides a close-to-real-time single source of truth for the predictive analytics and data visualization tools used to improve business intelligence.
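The ETL pattern described above can be sketched in a few lines of Python, using an in-memory SQLite database as a stand-in for the data warehouse. This is an illustration under stated assumptions, not a description of any particular ETL product: the source records, field names, table names, and the full-refresh loading strategy are all hypothetical.

```python
import sqlite3

# Hypothetical extracts from two silo applications. Note the inconsistent
# machine IDs and the cost stored as text: typical transformation work.
CMMS_WORK_ORDERS = [
    {"machine": "ex-101 ", "repair_cost": "1250.00"},
    {"machine": "EX-101", "repair_cost": "300.50"},
    {"machine": "dz-204", "repair_cost": "80.00"},
]
JOB_COST_HOURS = [
    {"equip_no": "EX-101", "job": "J17", "hours": 38.5},
    {"equip_no": "DZ-204", "job": "J09", "hours": 18.0},
]


def normalize_id(raw):
    """Transform step: give every source the same machine ID format."""
    return raw.strip().upper()


def run_etl(conn, work_orders, job_hours):
    """Load both extracts into warehouse tables (simple full refresh)."""
    conn.execute("CREATE TABLE IF NOT EXISTS repair_cost (machine_id TEXT, cost REAL)")
    conn.execute("CREATE TABLE IF NOT EXISTS job_hours (machine_id TEXT, job TEXT, hours REAL)")
    # Assumption: each run replaces the previous load rather than appending.
    conn.execute("DELETE FROM repair_cost")
    conn.execute("DELETE FROM job_hours")
    conn.executemany(
        "INSERT INTO repair_cost VALUES (?, ?)",
        [(normalize_id(r["machine"]), float(r["repair_cost"])) for r in work_orders],
    )
    conn.executemany(
        "INSERT INTO job_hours VALUES (?, ?, ?)",
        [(normalize_id(r["equip_no"]), r["job"], float(r["hours"])) for r in job_hours],
    )
    conn.commit()


def repair_cost_per_hour(conn):
    """Answer a cross-silo question directly from the warehouse."""
    query = """
        SELECT c.machine_id, c.total_cost / h.total_hours
        FROM (SELECT machine_id, SUM(cost) AS total_cost
              FROM repair_cost GROUP BY machine_id) AS c
        JOIN (SELECT machine_id, SUM(hours) AS total_hours
              FROM job_hours GROUP BY machine_id) AS h
          ON h.machine_id = c.machine_id
        ORDER BY c.machine_id
    """
    return conn.execute(query).fetchall()


warehouse = sqlite3.connect(":memory:")
run_etl(warehouse, CMMS_WORK_ORDERS, JOB_COST_HOURS)
cost_rates = repair_cost_per_hour(warehouse)
print(cost_rates)
```

The point of the design is that the source applications are never modified: cleaning and matching happen once, on the way into the warehouse, and every report then reads from the same tables.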

Knowing that a machine has sent a series of alerts is not good enough. Generation 3 computing makes it possible to combine machine health information with operator name and history, current and past job phase codes, cost trends, component life, recent oil analysis, and many other factors needed to develop the cause-and-effect relationships that improve decision making. Generation 3 computing gives us less-than-obvious insights into our business and enables us to look forward rather than backward when deciding what to do.
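As a small illustration of putting alerts in context, the Python sketch below matches each alert to the operator and job phase code on record for that machine and shift, then reports alerts per operating hour for each combination. The records, field names, and alert code are hypothetical, and a rate like this only points to where to look; it does not by itself establish cause and effect.

```python
from collections import defaultdict

# Hypothetical warehouse rows: telematics alerts and shift records.
ALERTS = [
    {"machine_id": "EX-101", "shift": "2024-03-04", "code": "HYD_TEMP_HIGH"},
    {"machine_id": "EX-101", "shift": "2024-03-04", "code": "HYD_TEMP_HIGH"},
    {"machine_id": "EX-101", "shift": "2024-03-04", "code": "HYD_TEMP_HIGH"},
    {"machine_id": "EX-101", "shift": "2024-03-05", "code": "HYD_TEMP_HIGH"},
]
SHIFTS = [
    {"machine_id": "EX-101", "shift": "2024-03-04",
     "operator": "Operator A", "phase_code": "310", "hours": 8.0},
    {"machine_id": "EX-101", "shift": "2024-03-05",
     "operator": "Operator B", "phase_code": "310", "hours": 8.0},
    {"machine_id": "EX-101", "shift": "2024-03-06",
     "operator": "Operator A", "phase_code": "420", "hours": 8.0},
]


def alerts_per_hour_by_context(alerts, shifts):
    """Return alerts per operating hour for each (operator, phase code)."""
    # Who ran the machine, on what kind of work, for each machine and shift.
    context = {
        (s["machine_id"], s["shift"]): (s["operator"], s["phase_code"])
        for s in shifts
    }

    # Total operating hours for each (operator, phase code) combination.
    hours = defaultdict(float)
    for s in shifts:
        hours[(s["operator"], s["phase_code"])] += s["hours"]

    # Count alerts against the context they occurred in.
    counts = defaultdict(int)
    for a in alerts:
        key = context.get((a["machine_id"], a["shift"]))
        if key is not None:  # skip alerts with no matching shift record
            counts[key] += 1

    return {key: counts[key] / total for key, total in hours.items() if total > 0}


alert_rates = alerts_per_hour_by_context(ALERTS, SHIFTS)
for key, rate in sorted(alert_rates.items()):
    print(key, rate)
```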

Software to perform the ETL routines, manage the data warehouse, and produce the required outputs is becoming increasingly robust and available. Construction companies are following other sectors and implementing ETL and data warehouse tools to improve their competitive advantage.

Equipment management has much to gain from the continued deployment of Generation 3 computing. Last month (“Attack the Causes of Cost”), we identified four critical success factors: Know your cost, focus on utilization, measure reliability, and manage age. The data needed to build quantitative tools that measure success in these four areas is unlikely to be found in any one silo-specific application. We have made some progress in our quest for better information by developing data export and import routines and by building custom reports. We will need to take a more ambitious long-term view and move solidly toward Generation 3 computing before we can unlock the full potential of the data we have and the predictive analytics techniques currently available.
